Justin N. Wood and Samantha M. W. Wood

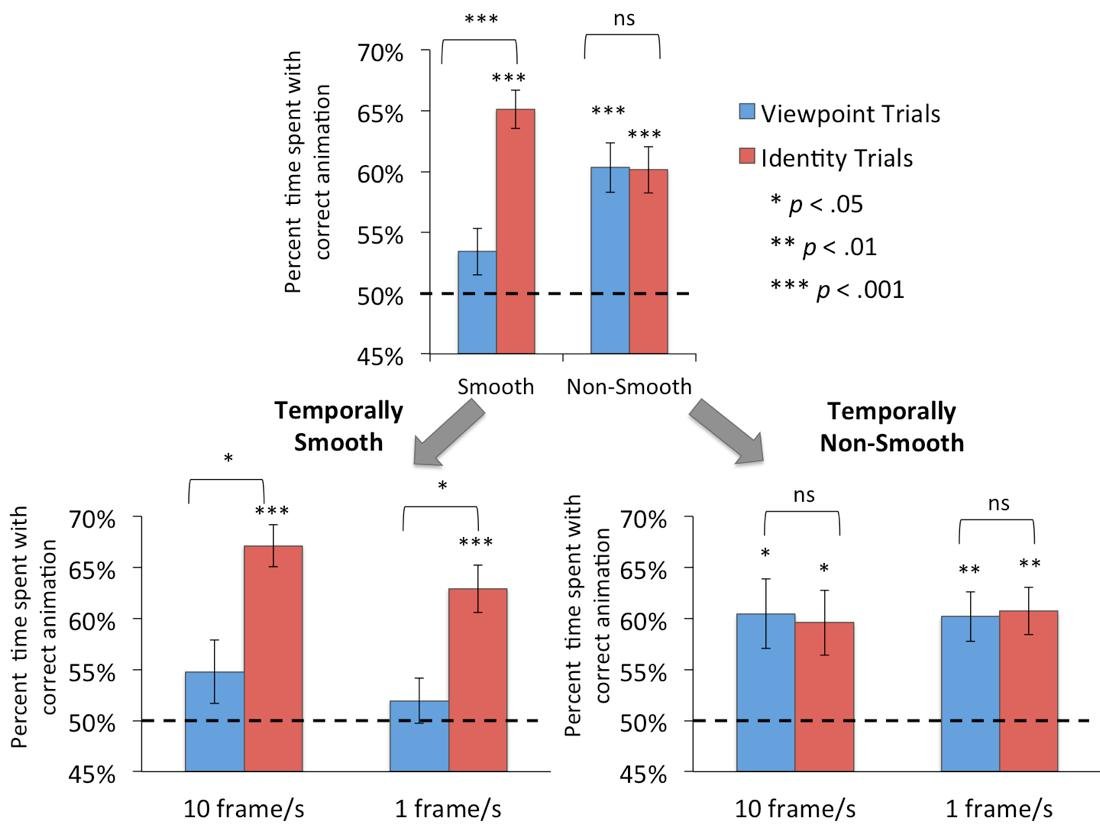

How do newborns learn to recognize objects? According to temporal learning models in computational neuroscience, the brain constructs object representations by extracting smoothly changing features from the environment. However, it remains unknown whether newborns depend on smoothly changing features to build invariant object representations. Here, we used an automated controlled-rearing method to examine whether visual experience with smoothly changing features facilitates the development of view-invariant object recognition in a newborn animal model, the domestic chick (*Gallus gallus*). When newborn chicks were reared with a virtual object that moved smoothly over time, the chicks created view-invariant representations that were selective for object identity and tolerant to viewpoint changes. Conversely, when newborn chicks were reared with a temporally non-smooth object, the chicks developed less selectivity for identity features and less tolerance to viewpoint changes. These results provide evidence for a ‘smoothness constraint’ on the development of invariant object recognition and indicate that newborns leverage the temporal smoothness of natural visual environments to build abstract mental models of objects.
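To make "smoothly changing features" concrete, the sketch below borrows the slowness objective from slow feature analysis, one family of temporal learning models: the mean squared change between consecutive frames, where lower values mean a smoother sequence. This is an illustration under stated assumptions, not the method used in this study. Each viewpoint is encoded by a hypothetical 2-D feature (its position on the unit circle), and the non-smooth sequence is assumed to be the same frames in shuffled order; how the study's non-smooth stimuli were actually constructed is not specified here.

```python
import math
import random


def slowness(sequence):
    """Mean squared change between consecutive feature vectors (lower = smoother).

    This is the temporal-derivative term that slow feature analysis minimizes.
    """
    diffs = [
        sum((c - p) ** 2 for p, c in zip(prev, curr))
        for prev, curr in zip(sequence, sequence[1:])
    ]
    return sum(diffs) / len(diffs)


def viewpoint_features(angle_deg):
    # Hypothetical feature encoding: viewpoint angle mapped onto the unit circle.
    rad = math.radians(angle_deg)
    return (math.cos(rad), math.sin(rad))


# Smooth presentation: the object rotates through 360 degrees in 10-degree steps.
angles = list(range(0, 360, 10))
smooth = [viewpoint_features(a) for a in angles]

# Non-smooth presentation (assumption): the same frames in a random order.
rng = random.Random(0)
shuffled_angles = angles[:]
rng.shuffle(shuffled_angles)
non_smooth = [viewpoint_features(a) for a in shuffled_angles]

print(f"smooth:     {slowness(smooth):.4f}")
print(f"non-smooth: {slowness(non_smooth):.4f}")
```

Both sequences contain exactly the same images; only their temporal order differs. That is why a learner sensitive to slowness could, in principle, treat them differently even though their frame-by-frame content is identical.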

(A) Illustration of a controlled-rearing chamber. The chambers contained no real-world objects. To present object stimuli to the chicks, virtual objects were projected on two display walls situated on opposite sides of the chamber. During the input phase (first week of life), newborn chicks were raised with a single virtual object moving smoothly or non-smoothly over time. Half of the chicks were raised with the object shown in panel (B), and half were raised with the object shown in panel (C). Panels (B) and (C) show successive images of each object as presented to the chicks.

The experimental procedure. These schematics illustrate how the virtual stimuli were presented during sample 4-h periods of the (A) input phase and (B) test phase. During the input phase, newborn chicks were reared with a single virtual object. The object appeared on one wall at a time (blue segments on the timeline) and switched walls every 2 h, after a 1-min period of darkness (black segments). During the test phase, two object animations were shown simultaneously, one on each display wall, for 20 min per hour (gray segments). The illustrations below the timeline are examples of paired test objects displayed in four of the test trials. Each test trial was followed by a 40-min rest period (blue segments). During the rest periods, the animation from the input phase was shown on one display wall, and the other display wall was blank. These illustrations show the displays seen by chicks raised with Imprinted Object 1 (Fig. 1). (C) A sample Viewpoint Trial and (D) a sample Identity Trial in the Temporally Smooth Condition.
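The timing in panels (A) and (B) can be written out as a simple schedule. The sketch below is a hypothetical reconstruction, not the authors' control software. It assumes that each 2-h input block starts with the 1-min darkness period and then shows the object for the remaining 119 min. It also records the rest-period wall only as "one", because the caption does not say which wall showed the input animation. The names `Segment`, `input_phase`, and `test_phase` are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    start_min: int     # minutes from the start of the sample period
    duration_min: int
    kind: str          # "dark", "object", "test", or "rest"
    wall: str          # active display wall(s): "left", "right", "both", "one", or "none"


def input_phase(hours: int = 4, switch_every_min: int = 120, dark_min: int = 1) -> List[Segment]:
    """Input phase: one object on one wall at a time, switching walls every 2 h.

    Assumption: each block begins with the darkness period before the object reappears.
    """
    segments = []
    walls = ["left", "right"]  # hypothetical labels for the two opposite display walls
    n_blocks = (hours * 60) // switch_every_min
    for block in range(n_blocks):
        t = block * switch_every_min
        segments.append(Segment(t, dark_min, "dark", "none"))
        segments.append(
            Segment(t + dark_min, switch_every_min - dark_min, "object", walls[block % 2])
        )
    return segments


def test_phase(hours: int = 4, test_min: int = 20, rest_min: int = 40) -> List[Segment]:
    """Test phase: each hour is a 20-min test trial followed by a 40-min rest period."""
    segments = []
    for h in range(hours):
        t = h * (test_min + rest_min)
        segments.append(Segment(t, test_min, "test", "both"))  # two animations, one per wall
        segments.append(Segment(t + test_min, rest_min, "rest", "one"))  # input animation, other wall blank
    return segments


for seg in input_phase() + test_phase():
    print(seg)
```

Writing the schedule this way makes the proportions explicit: in a 4-h test-phase sample, 80 min are test trials and 160 min are rest periods.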

SI Movie 1. A sample Rest Period showing Object 2 moving smoothly at a presentation rate of 10 fps.

SI Movie 2. A sample Rest Period showing Object 2 moving smoothly at a presentation rate of 1 fps.

SI Movie 3. A sample Rest Period showing Object 2 moving non-smoothly at a presentation rate of 10 fps.

SI Movie 4. A sample Rest Period showing Object 2 moving non-smoothly at a presentation rate of 1 fps.

SI Movie 5. A sample Viewpoint Trial showing Object 2 moving smoothly at a presentation rate of 10 fps.

SI Movie 6. A sample Viewpoint Trial showing Object 2 moving non-smoothly at a presentation rate of 1 fps.

SI Movie 7. A sample Identity Trial showing the objects moving smoothly at a presentation rate of 10 fps.

SI Movie 8. A sample Identity Trial showing the objects moving non-smoothly at a presentation rate of 1 fps.